Running SSL API with Multi-node local Kubernetes Cluster

Tue Mar 29 2022, 8 min read

The pain of local development with Kubernetes

Personally I love Kubernetes. It has solved so many problems that used to haunt development teams going to production and keep production up and running.

But one major pain point with Kubernetes has always been local development. There is no way to test a fully fledged cluster setup, or how your application behaves in a distributed environment, until you deploy it to an actual cluster.

There are many platforms that offer Kubernetes clusters to play with, for example Katacoda, Play with Kubernetes and Minikube. Or you can go with a managed service such as GKE (Google) or EKS (Amazon).

But as far as I have found, each of these options has limitations. Either the environments are temporary (Katacoda, Play with Kubernetes), or you get only a single-node cluster (Minikube), or you have to pay for what you consume (GKE, EKS).

What if we could set up a multi-node Kubernetes cluster locally for development and testing? One that is permanent and doesn’t cost a single penny? Sounds great? And what if the cluster setup process were simple and straightforward too?

Introducing KinD

As per the official definition for KinD (Kubernetes in Docker) -

kind is a tool for running local Kubernetes clusters using Docker container “nodes”. kind was primarily designed for testing Kubernetes itself, but may be used for local development or CI.

Installing KinD

There are multiple ways to install KinD, but I prefer to install it with Go.

Make sure you have Go (1.17+) and Docker installed.

    go install sigs.k8s.io/kind@latest

This places the kind binary in $(go env GOPATH)/bin, so make sure that directory is on your PATH.

Creating the Cluster

We will start with a simple cluster, with one control-plane node and two worker nodes.

Start by creating a config file - kind-config.yaml

You can find the file in the GitHub repo

    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
    - role: worker
    - role: worker

Creating the cluster is simple. Run the command:

    kind create cluster --config kind-config.yaml

Verify that the cluster is running as expected.

    kubectl get nodes

Building the API

We are going to build a simple Express server that listens on / and returns the hostname.

    const express = require('express')
    const os = require('os')

    const app = express()
    const port = 8080

    app.get('/', (req, res) => {
      res.send(`You've hit ${os.hostname()} \n`)
    })

    app.listen(port, () => {
      console.log(`Express app listening on port ${port}`)
    })

Create the Dockerfile for the API container.

    FROM node:16-alpine
    WORKDIR /express-app
    COPY . .
    RUN npm install
    EXPOSE 8080
    ENTRYPOINT ["node", "app.js"]

All code is under the api folder in the GitHub repo.

Introducing Envoy Proxy

Envoy is an L7 proxy and communication bus designed for large modern service oriented architectures (from official documentation)

Why use Proxy for SSL

If you implement TLS support within the Node.js application itself, the application consumes fewer computing resources and has lower latency, because no additional network hop is required. Adding the Envoy proxy, however, can be a faster and easier solution.

It also provides a good starting point from which you can add many other features provided by Envoy that you would probably never implement in the application code itself.

Refer to the Envoy proxy documentation to learn more.
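For comparison, here is a rough sketch of what the in-application alternative could look like. It uses Node's built-in https module instead of Express to stay dependency-free. The helper name createTlsServer and the certificate file names in the usage comment are assumptions for illustration, not code from the repo.

```javascript
const https = require('https')
const fs = require('fs')
const os = require('os')

// Same response body as the Express handler shown earlier.
function handler(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' })
  res.end(`You've hit ${os.hostname()} \n`)
}

// Hypothetical helper: builds an HTTPS server from a key/cert pair on disk.
// Nothing listens until the caller invokes .listen() on the result.
function createTlsServer(keyPath, certPath) {
  const options = {
    key: fs.readFileSync(keyPath),
    cert: fs.readFileSync(certPath),
  }
  return https.createServer(options, handler)
}

// Usage (assumes both files exist in the working directory):
// createTlsServer('example-com.key', 'example-com.crt').listen(8443)
```

With this approach the certificate handling lives in the application code and its image, which is exactly the coupling the sidecar avoids.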

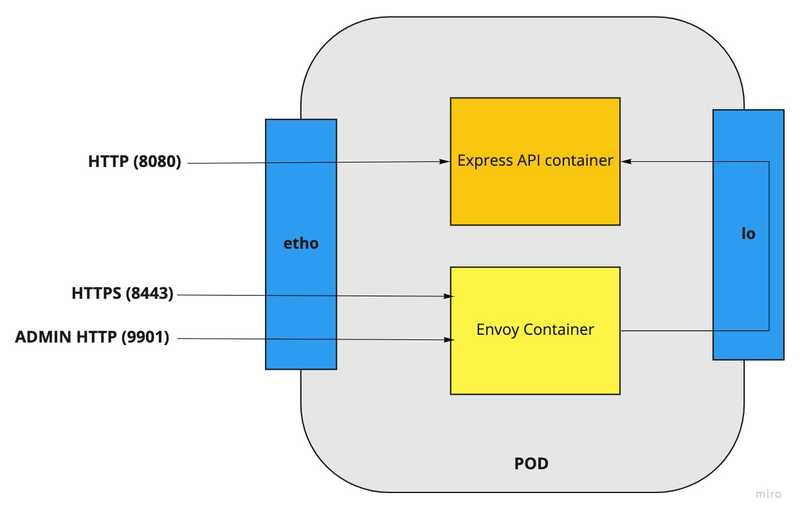

Proposed Pod Architecture

The Express API container binds to port 8080 on both network interfaces (eth0 and loopback).

The Envoy proxy handles HTTPS on port 8443. It also exposes an admin interface on port 9901.

The Envoy proxy sends HTTP requests to the Express API through the pod’s loopback address.
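To make the forwarding step concrete, here is a minimal sketch of the idea in Node. It is not how Envoy is implemented and it skips TLS entirely. It only shows a process accepting a request and replaying it against an upstream on 127.0.0.1. The name createForwarder and the 503 fallback response are my own assumptions.

```javascript
const http = require('http')

// Hypothetical stand-in for the sidecar: accepts a request and replays it
// against an upstream on the loopback interface (plain HTTP, no TLS here).
function createForwarder(upstreamPort) {
  return http.createServer((clientReq, clientRes) => {
    const upstreamReq = http.request(
      {
        host: '127.0.0.1',
        port: upstreamPort,
        method: clientReq.method,
        path: clientReq.url,
        headers: clientReq.headers,
      },
      (upstreamRes) => {
        // Copy the upstream status and headers, then stream the body back.
        clientRes.writeHead(upstreamRes.statusCode, upstreamRes.headers)
        upstreamRes.pipe(clientRes)
      }
    )
    // If nothing is listening on the loopback port, answer with an error
    // instead of leaving the client hanging.
    upstreamReq.on('error', () => {
      if (!clientRes.headersSent) {
        clientRes.writeHead(503, { 'Content-Type': 'text/plain' })
      }
      clientRes.end('upstream connect error\n')
    })
    clientReq.pipe(upstreamReq)
  })
}

// Usage: createForwarder(8080).listen(8443)
```

Because both containers in a pod share one network namespace, 127.0.0.1 inside the proxy container reaches the API container. Outside a pod, the same address points at nothing.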

Setting up Envoy container

The files for the Envoy container are available under the ssl folder in the GitHub repo.

We start by generating self-signed certificates for SSL. For demo purposes, we will create certificates for example.com.
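One possible way to generate such a certificate is to call the openssl CLI. The sketch below wraps that call in a small Node script. It assumes openssl is installed and on your PATH. The helper names are hypothetical, and the repo may well generate its certificates differently. The output file names match the ones used in the Envoy config.

```javascript
const { execFileSync } = require('child_process')

// Builds the argument list for `openssl req` that produces a self-signed
// certificate and an unencrypted private key for the given domain.
function opensslArgs(domain, days = 365) {
  const base = domain.replace(/\./g, '-') // example.com -> example-com
  return [
    'req', '-x509',
    '-newkey', 'rsa:2048',
    '-nodes', // do not encrypt the private key
    '-keyout', `${base}.key`,
    '-out', `${base}.crt`,
    '-days', String(days),
    '-subj', `/CN=${domain}`,
  ]
}

// Hypothetical helper: runs openssl in the current working directory.
function generateSelfSignedCert(domain) {
  execFileSync('openssl', opensslArgs(domain), { stdio: 'inherit' })
}

// Usage:
// generateSelfSignedCert('example.com')
```

A certificate created this way is not trusted by any client, which is why the curl calls later in this post use --insecure.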

Next, we have to create a config file for Envoy. We will name it envoy.yaml.

Start by defining the admin port.

    admin:
      access_log_path: /tmp/envoy.admin.log
      address:
        socket_address:
          protocol: TCP
          address: 0.0.0.0
          port_value: 9901

Next, we define the source for the API.

    clusters:
    - name: service_express_localhost
      connect_timeout: 0.25s
      type: STATIC
      load_assignment:
        cluster_name: service_express_localhost
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: 127.0.0.1
                  port_value: 8080

Finally, define the listeners for binding the HTTPS port.

The final YAML looks like this:

    admin:
      access_log_path: /tmp/envoy.admin.log
      address:
        socket_address:
          protocol: TCP
          address: 0.0.0.0
          port_value: 9901
    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address:
            address: 0.0.0.0
            port_value: 8443
        filter_chains:
        - transport_socket:
            name: envoy.transport_sockets.tls
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext
              common_tls_context:
                tls_certificates:
                - certificate_chain:
                    filename: "/etc/certs/example-com.crt"
                  private_key:
                    filename: "/etc/certs/example-com.key"
          filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.config.filter.network.http_connection_manager.v2.HttpConnectionManager
              stat_prefix: ingress_http
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match:
                      prefix: "/"
                    route:
                      cluster: service_express_localhost
              http_filters:
              - name: envoy.filters.http.router
      clusters:
      - name: service_express_localhost
        connect_timeout: 0.25s
        type: STATIC
        load_assignment:
          cluster_name: service_express_localhost
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: 127.0.0.1
                    port_value: 8080

Finally, we define the Dockerfile for the container.

    FROM envoyproxy/envoy:v1.14.1
    COPY envoy.yaml /etc/envoy/envoy.yaml
    COPY example-com.crt /etc/certs/example-com.crt
    COPY example-com.key /etc/certs/example-com.key

Preparing Container Images

Now that the setup is done, the next step is to build and push the images.

    make

The Makefile takes care of building and pushing the images.

To make sure the containers work as expected, try running them.

Express API container

    docker run --rm -p 8080:8080 deboroy/simple-express-api

This should start the container. To verify that it is working, try curling the URL.

    curl -s http://localhost:8080/

You should see something similar to this:

    You've hit c1872db22f92

This means the Express API container is working perfectly.

Envoy Proxy Container

Now we can start the Envoy container. On its own, though, it will return an error for every request, because nothing is listening on port 8080 of its loopback interface.

    docker run --rm -p 8443:8443 deboroy/envoy-ssl-proxy

Now if we curl the HTTPS URL, we should see an error.

    curl -s https://localhost:8443 --insecure

The error will look something like this:

    upstream connect error or disconnect/reset before headers. reset reason: connection failure

This means that the proxy container is running and listening on port 8443, but it is not able to connect to the source. Let’s fix this by deploying our pod.

Deploying Containers on Pods

Now let’s create a pod description as per our design. Let’s name the file pod.express-ssl.yaml.

    apiVersion: v1
    kind: Pod
    metadata:
      name: express-ssl
    spec:
      containers:
      - name: express-api
        image: deboroy/simple-express-api
        ports:
        - name: http
          containerPort: 8080
      - name: envoy
        image: deboroy/envoy-ssl-proxy
        ports:
        - name: https
          containerPort: 8443
        - name: admin
          containerPort: 9901

The description is pretty straightforward. We are running the Express container in our pod, with the Envoy proxy as the sidecar.

Verify that the KinD cluster is up and running.

    kubectl cluster-info --context kind-kind

Next, create the pod.

    kubectl apply -f pod.express-ssl.yaml

Verify that the pod is created.

    kubectl get po -w

    NAME          READY   STATUS              RESTARTS   AGE
    express-ssl   0/2     ContainerCreating   0          5s
    express-ssl   2/2     Running             0          22s

    kubectl describe po express-ssl

You should see in the events that the containers have started.

    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  35s   default-scheduler  Successfully assigned default/express-ssl to kind-worker2
      Normal  Pulling    35s   kubelet            Pulling image "deboroy/simple-express-api"
      Normal  Pulled     26s   kubelet            Successfully pulled image "deboroy/simple-express-api" in 9.1613133s
      Normal  Created    25s   kubelet            Created container express-api
      Normal  Started    25s   kubelet            Started container express-api
      Normal  Pulling    25s   kubelet            Pulling image "deboroy/envoy-ssl-proxy"
      Normal  Pulled     14s   kubelet            Successfully pulled image "deboroy/envoy-ssl-proxy" in 11.1944752s
      Normal  Created    13s   kubelet            Created container envoy
      Normal  Started    13s   kubelet            Started container envoy

We cannot access the containers directly yet. Ideally, we would deploy a load balancer to test the endpoints. But for our local testing, let’s port-forward to verify.

    kubectl port-forward express-ssl 8080 8443 9901

    Forwarding from 127.0.0.1:8080 -> 8080
    Forwarding from [::1]:8080 -> 8080
    Forwarding from 127.0.0.1:8443 -> 8443
    Forwarding from [::1]:8443 -> 8443
    Forwarding from 127.0.0.1:9901 -> 9901
    Forwarding from [::1]:9901 -> 9901

Now when we try the curl over HTTPS again

    curl -s https://localhost:8443 --insecure

we get a proper response back from the server.

    You've hit express-ssl

Awesome! This means everything is working as expected.
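If you would rather run this check from Node than from curl, something like the sketch below should behave similarly. The function name fetchInsecure is my own. Setting rejectUnauthorized to false plays the role of curl's --insecure flag and should only ever be used against local, self-signed endpoints.

```javascript
const https = require('https')

// Fetches a URL over HTTPS without verifying the server certificate,
// mirroring `curl --insecure`. Resolves with the status code and body.
function fetchInsecure(url) {
  return new Promise((resolve, reject) => {
    https
      .get(url, { rejectUnauthorized: false }, (res) => {
        let body = ''
        res.setEncoding('utf8')
        res.on('data', (chunk) => { body += chunk })
        res.on('end', () => resolve({ status: res.statusCode, body }))
      })
      .on('error', reject)
  })
}

// Usage, with the port-forward still running:
// fetchInsecure('https://localhost:8443/').then(console.log)
```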

So with this whole setup, we saw how easy it is to set up a multi-node Kubernetes cluster locally with KinD. Next time, you can test your deployment locally before pushing it to an actual cluster.

Hope you had fun reading this. Happy Coding !!!